The Politics of Technology: Stochastic Parrots

It has been our misfortune that the information technology revolution, and it is nothing short of a revolution, has to this point been overwhelmingly measured by the values of industrialism. For example, it is no coincidence that the major revenue source for Google, Facebook, and Twitter is advertising. The value of the technology's information and communication elements, which critically might in fact not be very great, is measured only in relation to their ability to increase resource consumption. Such valuing, advanced by powerful centrally controlled corporations, has detrimentally warped the technology's development.

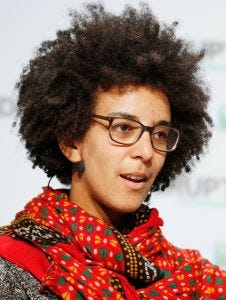

A paper on artificial intelligence released in 2021, entitled “On the Dangers of Stochastic Parrots: Can Language Models Be Too Big?”, represents the constraints of our thinking and values concerning information technology, which offers or threatens, depending on one's perspective, to radically alter society. These constraints were sharply revealed in how the paper was first received. In the dispute surrounding its publication, one of its authors, Timnit Gebru, was forced to leave her employer, Google. Just as unfortunate, the paper’s authors write, “This paper represents the work of seven authors, but some were required by their employer to remove their names.”

Such power to constrain speech, opinion, and association should be concerning to every citizen. It bodes ill for any society for something as fundamental as speech to be controlled by centralized power; certainly it is anathema to any notion of democracy. Massive hierarchically controlled corporations are industrialism's greatest social innovation. They are themselves technological innovations, constructs of technology. As such, they have continually and rather effortlessly evolved to control each new generation of technology, adapting to and greatly influencing how technology develops. The always undemocratic structure of the industrial corporation, combined with this new generation of technology, threatens to centralize power beyond any historical precedent.

The paper is well worth reading. It focuses on the software, that is, the algorithmic programming of information retrieval and the resulting data organizational processes of AI. No matter what’s promoted, AI's results are entirely defined by the data it gathers and how this data is then organized. Skewed data skews results, while how the results are organized influences meaning, just as with natural intelligence.

Results can be skewed both by the initial data sets and by the algorithms used to organize the data; together these determine results. The data and its algorithmic manipulation can carry human or machine prejudice. The programs that perform AI's data gathering and organization are called language models (LMs), helpfully defined in the paper as “systems trained to predict sequences of words (or characters or sentences).” The programs “differ in the size of the training datasets they leverage and the spheres of influence they can possibly affect. By scaling up in these two ways, modern very large LMs incur new kinds of risk.” No matter how large, all AI LMs are constructed, designed, “trained,” and dependent on specific data. In every way, the data can prejudice results.
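The point that skewed data skews results can be seen in a deliberately tiny sketch. What follows is not the architecture of any real LM; it is a bare word-pair counter, and the function names (`train_bigram`, `predict_next`) and both toy corpora are invented here purely for illustration. The program is identical in both runs; only the mix of training sentences changes, and the "prediction" changes with it.

```python
from collections import Counter, defaultdict

def train_bigram(sentences):
    """Count, for each word, which words follow it in the training text."""
    follows = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.lower().split()
        for current, nxt in zip(words, words[1:]):
            follows[current][nxt] += 1
    return follows

def predict_next(model, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    counts = model.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]

# Two invented toy corpora (assumptions for illustration only).
# The sentences are the same; only their proportions differ.
corpus_a = ["the nurse said she was tired"] * 3 + ["the nurse said he was tired"]
corpus_b = ["the nurse said she was tired"] + ["the nurse said he was tired"] * 3

model_a = train_bigram(corpus_a)
model_b = train_bigram(corpus_b)

print(predict_next(model_a, "said"))  # follows the majority in corpus_a
print(predict_next(model_b, "said"))  # follows the majority in corpus_b
```

Nothing in the code holds an opinion about nurses. Whatever association comes out was put in by the selection of the data.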

The paper correctly identifies concerns, stating,

“However, in both application areas, the training data has been shown to have problematic characteristics resulting in models that encode stereotypical and derogatory associations along gender, race, ethnicity, and disability status.”

Again, all AI results are determined by the data used and the design of the programs manipulating the data, even if the program itself is designed by the machine. The authors correctly conclude, “In summary, LMs trained on large, uncurated, static datasets from the Web encode hegemonic views that are harmful to marginalized populations.”

The authors add,

“Contrary to how it may seem when we observe its output, an LM is a system for haphazardly stitching together sequences of linguistic forms it has observed in its vast training data, according to probabilistic information about how they combine, but without any reference to meaning: a stochastic parrot.”
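A toy version of the “stochastic parrot” can be sketched in a few lines. This is a minimal illustration under loud assumptions: real LMs are vastly larger and built differently, and the names `build_table` and `parrot`, along with the sample text, are hypothetical. The sketch only shows the principle the authors describe: choosing each next word by observed frequency, with no reference to meaning.

```python
import random
from collections import Counter, defaultdict

def build_table(text):
    """Record how often each word is followed by each other word."""
    table = defaultdict(Counter)
    words = text.split()
    for current, nxt in zip(words, words[1:]):
        table[current][nxt] += 1
    return table

def parrot(table, start, length, seed=0):
    """Stitch words together by sampling followers in proportion to their counts."""
    rng = random.Random(seed)  # fixed seed so a run can be repeated
    out = [start]
    for _ in range(length - 1):
        followers = table.get(out[-1])
        if not followers:
            break  # the last word was never followed by anything in the text
        choices, weights = zip(*followers.items())
        out.append(rng.choices(choices, weights=weights, k=1)[0])
    return " ".join(out)

# Invented sample text (an assumption for illustration only).
text = "the cat sat on the mat and the dog sat on the cat"
table = build_table(text)
print(parrot(table, "the", 8))
```

Every adjacent pair of words in the output appeared somewhere in the training text, which is why the result can look fluent, yet the program has no notion of cats, dogs, or mats.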

At this point, artificial intelligence is better understood as automated intelligence.

The paper's arguments are just as revealing of its authors’ political limitations. Our contemporary cultural politics of “gender, race, ethnicity, and disability” politically limit the authors, though they seem completely unaware of this limitation. The authors are biased by the parameters of popular politics. In regard to “hegemonic views,” corporate power is not mentioned. This is not to deny the importance of any given issue the authors highlight, but the exclusive focus on cultural issues limits the politics necessary to truly judge, value, and develop the technology, including with regard to the authors' own concerns. Such limitations in assessing the technology unhelpfully bound the politics of technology just as tightly as the exclusive focus on cultural issues constrains much contemporary politics.

The second aspect of contemporary popular politics the paper addresses is environmental concerns, which in many ways are as difficult and loosely defined as cultural ones. Specifically, the authors address the question of energy use and, only indirectly (a major fault of the paper), the hardware architecture of AI. The authors write, “Training a single... base model on GPUs (Graphics Processing Units) was estimated to require as much energy as a trans-American flight.”

They continue,

“The majority of cloud compute providers’ energy is not sourced from renewable sources and many energy sources in the world are not carbon neutral. In addition, renewable energy sources are still costly to the environment, and data centers with increasing computation requirements take away from other potential uses of green energy, underscoring the need for energy efficient model architectures and training paradigms.”

It needs to be stressed that computing has followed the formidable industrial model of centralized control and production, both entirely reliant on massive energy consumption. In this regard, it is a great failing of the paper not to better address the centralized compute architecture of “the cloud,” a grave concern at the very core of addressing all the technology's politics. Neither the cultural nor the environmental concerns the paper confronts can be addressed without coming to terms with the fundamentally undemocratic design of computing and the industry. AI is totally reliant on energy intensive, brute force compute, similar in many ways to the brute force of industrialization, promising nothing so much as greater centralization of political, economic, cultural, and environmental power.

A recent article in the Wall Street Journal hits on the problem,

“A shortage of the kind of advanced chips that are the lifeblood of new generative AI systems has set off a race to lock down computing power and find workarounds. The graphics chips, or GPUs, used for AI are almost all made by Nvidia.”

“That situation has restricted the processing power that cloud-service providers like Amazon.com and Microsoft can offer to clients such as OpenAI, the company behind ChatGPT. AI developers need the server capacity to develop and operate their increasingly complex models and help other companies build AI services.”

The technology is extensively centralized in large server factories marketed as “the cloud,” operating immensely energy intensive GPUs containing 54 billion transistors, all controlled by a tiny handful of mega-corporations. This reality stands in direct opposition to the fairy dust spread by Tech's next-generation pixie, Nvidia CEO Jensen Huang. The CEO repeats the industry's now rote marketing fantasy of individual empowerment for the masses, while ignoring the select few controlling it and walking away with billions. Huang advertises,

“‘Everyone is a programmer now. You just have to say something to the computer,’ Huang said in Taiwan on Monday, describing the combination of accelerated computing and generative AI as ‘a reinvention from the ground up.’”

Horseshit, this is no reinvention from the ground up. The whole thing is completely reliant on established centralized energy sources and corporate information infrastructures, promising to become both more energy intensive and centrally controlled.

While having little understanding of politics, or at least democracy, the Tech Industry certainly has a love of money and power. Far too influenced by the juvenile culture of individualism of America's late twentieth century, the industry has simultaneously ignored or, worse, denigrated the importance of human association. Society's structures, its organization (technological, cultural, economic, and political), define every individual's identity, and if they are not democratically organized, there is no democracy. This is either a blind spot or simply not a concern, not just for AI's advocates but, as “Stochastic Parrots” shows, for the industry's internal critics.

Industrialization transformed both human society and much of the face of the planet. In many ways, information technology promises to be just as transformative, not just in its direct interactions with people, but indirectly through ever greater communication between machines. Make no mistake, the greatest power of present AI will be removing more and more people from established systems and replacing them with machine organization, centralizing ever greater power in ever fewer hands.

We, humanity, have never understood that technological development doesn't take place in a vacuum. It was an understanding lost to the Industrial Era largely through simple ignorance, but in recent decades, with the growth of biological knowledge, it has become knowledge blatantly ignored. It is the understanding of life at the heart of Darwin's theory of Natural Selection. Every organism is designed and defined by its greater environment. An individual organism, or more accurately a given species, cannot be understood without knowledge of the greater environment from which the species evolved. Simultaneously, the organism becomes part of defining the greater ecological system.

Biological systemic complexity is not stasis; it is constantly interacting and evolving. We have never come to terms with industrialism's impact on both human society and the greater ecological systems from which it evolved, a complete failure to come to terms with dynamic processes in which every action within a system plays a role in defining the whole system. Information technology has been fitted with the same blinders.

The authors of “Stochastic Parrots” do a good job of succinctly defining this whole-systems complexity in regard to communication and language:

“We say seemingly coherent because coherence is in fact in the eye of the beholder. Our human understanding of coherence derives from our ability to recognize interlocutors’ beliefs and intentions within context. That is, human language use takes place between individuals who share common ground and are mutually aware of that sharing (and its extent), who have communicative intents which they use language to convey, and who model each others’ mental states as they communicate. As such, human communication relies on the interpretation of implicit meaning conveyed between individuals. The fact that human-human communication is a jointly constructed activity is most clearly true in co-situated spoken or signed communication, but we use the same facilities for producing language that is intended for audiences not co-present with us (readers, listeners, watchers at a distance in time or space) and in interpreting such language when we encounter it. It must follow that even when we don’t know the person who generated the language we are interpreting, we build a partial model of who they are and what common ground we think they share with us, and use this in interpreting their words.”

In this paragraph, the authors hit on the importance of whole systems. It is part of the fundamental relativity of existence revealed in the last century by our increasing knowledge of physics and biology. We have learned the fundamental importance of subjectivity, not as nihilism, but as the only way we can understand any specific thing: through its relation to other things. The parts of any given system can only truly be understood in relation to the other parts. The fundamental questions of AI are how Homo sapiens, meaning what defines each and every person as a member of our species, interacts with the technology, and how the technology interacts with society and the greater ecological systems of the planet, the systems that designed our evolution.

The paper does briefly conclude with a more helpfully expansive view,

“Likewise, it means considering the financial and environmental costs of model development up front, before deciding on a course of investigation. The resources needed to train and tune state-of-the-art models stand to increase economic inequities unless researchers incorporate energy and compute efficiency in their model evaluations. Furthermore, the goals of energy and compute efficient model building and of creating datasets and models where the incorporated biases can be understood both point to careful curation of data. Significant time should be spent on assembling datasets suited for the tasks at hand rather than ingesting massive amounts of data from convenient or easily-scraped Internet sources. As discussed, simply turning to massive dataset size as a strategy for being inclusive of diverse viewpoints is doomed to failure. We recall again Birhane and Prabhu’s words (inspired by Ruha Benjamin): 'Feeding AI systems on the world’s beauty, ugliness, and cruelty, but expecting it to reflect only the beauty is a fantasy.'”

Like all technology, AI will have a homogenizing effect across society, along with developing very specific power structures. Looking at the short history of computing, these power structures will be even more undemocratic. If AI is to be in any way democratic, it requires a democratic architecture: distributed and decentralized.

The authors stated above that “simply turning to massive dataset size as a strategy for being inclusive of diverse viewpoints is doomed to failure,” and that “significant time should be spent on assembling datasets suited for the tasks at hand.” This is key. The politics of AI require a focus on creating new social associations and evolving existing ones. It is through associations, through social organization, that information can be valuably created, edited, and communicated, with subjective understanding and with technology as a tool. Any sort of democracy requires this. The idea that individuals can be empowered through interaction with central power flies in the face of all historical experience. Just as industrialization created the job, a role completely defining an individual's economic identity, so an information intensive society requires roles in the creation, editing, and communication of information and, imperatively, new or evolved associations in which people in these roles can interact.

In creating or evolving these associations, it is the values of politics, the values of citizens, that can steer them to a much greater degree than the established values of the industrial economy, those of hyper-consumption. These new associations must value the free movement of and access to all information, once a foundation of modern republicanism, along with equality of communication for every individual, as it is known in any democratic assembly. These associations can be horizontally networked together and in constant interaction, a distributed ecology creating a whole reliant on and defined by all its parts, without the need for central control.

Computing has a relatively short history. To this point, it has evolved much as industrial technology did: a development defined not by the greater culture or the environment from which it arose, but overwhelmingly by the technology itself. AI is not a new technology, but part of the evolution of computing. Computing came out of industrialism, and industrialism reaches as far back as the harnessing of fire by the ancestors of Homo sapiens. Yet, across the whole expanse of human history, technology has not been perceived as a social shaping force. It has largely been held separate from politics instead of being understood as a force fundamentally shaping politics.

Over the last century, our growing knowledge of biology and quantum physics initiated a whole new era of ever more powerful technologies. The knowledge of the sciences themselves has barely impacted popular culture, while the technologies increasingly define it. The birth of this new technological era was sharply and unequivocally announced by nuclear weaponry. Three decades ago, British journalist Adam Curtis made a still valuable short documentary on the beginnings of the nuclear era, “A is for Atom.” Near the end of the film, Alvin Weinberg, Director of Oak Ridge National Laboratory from 1955 to 1971 (Oak Ridge created the fissionable material for the first nuclear bombs and reactors), wisely comments about developing nuclear power,

“The decision of what was acceptable was not something we technologists can make, it's something the public makes. It never occurred to me to ask this question. I think the basic question is can modern intrusive technology and liberal democracy coexist?”

The answer is clearly no. What we presently conceive of as democracy, most especially “liberal” democracy, cannot coexist with these new technologies. Each year the proof becomes ever more apparent to anyone caring to look. Evolving democracy remains the only answer for humanity to more healthily develop technology, not just in regard to its social impact, but with the constructive functioning of the technology itself. A politics of technology needs to develop.